# Ordinary least squares (OLS)

OLS is at the core of the econometrics curriculum: it is easy to derive and a practical way to familiarise a learner with the possibilities and limitations of regression.

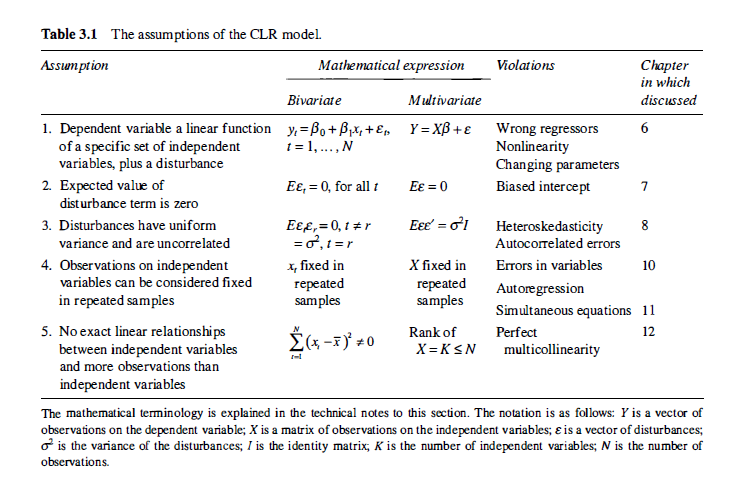

The usual way to teach OLS is to present its assumptions and show how to deal with their violations, as summarised in the review chart from Kennedy’s textbook.

Math:

\(Y = X\beta + \epsilon\), where the errors \(\epsilon\) are i.i.d. normal with zero mean and constant, finite variance \(\sigma^2\).
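In matrix form, with \(X\) the \(n \times k\) matrix of regressors and \(\beta\) a \(k\)-vector of coefficients, OLS chooses \(\hat\beta\) to minimise the sum of squared residuals. A sketch of the standard derivation, assuming \(X^\top X\) is invertible (no perfect multicollinearity):

\[
S(\beta) = (Y - X\beta)^\top (Y - X\beta), \qquad
\frac{\partial S}{\partial \beta} = -2\, X^\top (Y - X\beta) = 0
\]

\[
X^\top X \hat\beta = X^\top Y
\quad\Longrightarrow\quad
\hat\beta = (X^\top X)^{-1} X^\top Y
\]

Normality of \(\epsilon\) is not needed to compute \(\hat\beta\); it matters for exact small-sample inference such as t and F tests.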

Common steps:

1. Specify the model: select explanatory variables and transform them if needed.
2. Estimate the coefficients.
3. Assess model quality (the hardest part).
4. Go back to step 1 if needed.
5. Know what the model does not show (the next hardest part).
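The steps above can be sketched for a single regressor, \(y = a + b x + \epsilon\), using only the Python standard library. The data, the log transform chosen in step 1 and the helper names `fit_ols` and `r_squared` are hypothetical, for illustration only; \(R^2\) covers just a small part of step 3.

```python
# Sketch of the common steps for y = a + b * x + e (one regressor).
# Hypothetical data and helper names; standard library only.
import math
from statistics import mean


def fit_ols(x, y):
    """Step 2: least-squares intercept a and slope b."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


def r_squared(x, y, a, b):
    """Step 3 (one small part): R^2 plus the residuals to inspect further."""
    my = mean(y)
    residuals = [yi - (a + b * xi) for xi, yi in zip(x, y)]
    ss_res = sum(e ** 2 for e in residuals)
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return 1 - ss_res / ss_tot, residuals


# Step 1: specify the model; here x enters in logs (an illustrative choice).
x_raw = [1, 2, 4, 8, 16, 32]
y = [0.1, 1.0, 2.1, 2.9, 4.2, 4.9]
x = [math.log2(v) for v in x_raw]

a, b = fit_ols(x, y)
r2, residuals = r_squared(x, y, a, b)
print(f"a={a:.3f}, b={b:.3f}, R2={r2:.3f}")
# Step 4: if the residuals show a pattern, go back to step 1 and respecify.
print([round(e, 3) for e in residuals])
```

With an intercept in the model the residuals sum to zero, which is a quick sanity check on the fit; a high \(R^2\) alone says nothing about step 5.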

What may go wrong:

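- residuals are not random (for example, they are autocorrelated)
- variables are non-stationary or cointegrated, so standard OLS inference does not apply
- regressors are multicollinear
- the variance of the residuals depends on x (heteroscedasticity)
- statistical association is mistaken for causality
- coefficients have wrong signs or are insignificant
- variable normalisation (scaling) is not described, so coefficients are hard to interpret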
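Several of these problems have standard textbook diagnostics. A minimal sketch, assuming a single regressor and made-up data: the Durbin–Watson statistic for first-order autocorrelation of residuals, Koenker's \(nR^2\) form of the Breusch–Pagan test for heteroscedasticity (compare with the \(\chi^2_1\) 5% critical value of about 3.84), and the variance inflation factor for two regressors. The helper names are hypothetical.

```python
# Textbook diagnostics for some problems listed above (one regressor).
# Hypothetical helper names and made-up data; standard library only.
from statistics import mean


def simple_ols(x, y):
    """Intercept, slope and residuals of y = a + b * x + e."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    a = my - b * mx
    return a, b, [yi - a - b * xi for xi, yi in zip(x, y)]


def r_squared(y, residuals):
    """1 - SSR / SST for a fit with an intercept."""
    my = mean(y)
    return 1 - sum(e * e for e in residuals) / sum((yi - my) ** 2 for yi in y)


def durbin_watson(residuals):
    """About 2: no first-order autocorrelation; near 0: positive; near 4: negative."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    return num / sum(e * e for e in residuals)


def breusch_pagan_lm(x, residuals):
    """Koenker's form: n * R^2 from regressing squared residuals on x.
    Under homoscedasticity it is approximately chi-squared with 1 d.f."""
    e2 = [e * e for e in residuals]
    _, _, u = simple_ols(x, e2)
    return len(x) * r_squared(e2, u)


def vif_two_regressors(x1, x2):
    """Variance inflation factor 1 / (1 - R^2), R^2 from regressing x1 on x2."""
    _, _, u = simple_ols(x2, x1)
    return 1 / (1 - r_squared(x1, u))


x = list(range(1, 21))
# Errors alternate in sign (negative autocorrelation) and grow with x.
y = [2 + 0.5 * xi + (-1) ** xi * 0.1 * xi ** 2 for xi in x]
_, _, e = simple_ols(x, y)
print("Durbin-Watson:", round(durbin_watson(e), 2))
print("Breusch-Pagan LM:", round(breusch_pagan_lm(x, e), 2), "vs 3.84")
```

With two regressors the VIF equals \(1/(1 - r^2)\), where \(r\) is their sample correlation; it grows without bound as the regressors become collinear.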

Discussion:

- Why use the sum of squares as the loss function?
- Connections to Bayesian estimation.
- Is \(R^2\) useful or dangerous?
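A few numerical illustrations for these questions, as a sketch with made-up data and hypothetical helper names: the mean minimises squared loss while the median minimises absolute loss; under Gaussian errors the log-likelihood is a decreasing function of the sum of squared residuals, so maximum likelihood and OLS coincide; with a Gaussian prior \(b \sim N(0, \tau^2)\) on a no-intercept slope, the posterior mode is a ridge estimate that tends to OLS as \(\tau\) grows; and adjusted \(R^2\) penalises extra regressors, which plain \(R^2\) never does.

```python
# Illustrations for the discussion questions; standard library only.
# Data and helper names are hypothetical.
import math
from statistics import mean, median


def sse(c, y):
    """Sum of squared deviations of y from a constant prediction c."""
    return sum((yi - c) ** 2 for yi in y)


def sae(c, y):
    """Sum of absolute deviations of y from a constant prediction c."""
    return sum(abs(yi - c) for yi in y)


def gaussian_loglik(b, x, y, sigma):
    """Log-likelihood of y = b * x + e, e ~ N(0, sigma^2). It decreases in
    the sum of squared residuals, so the ML slope equals the OLS slope."""
    n = len(y)
    ssr = sum((yi - b * xi) ** 2 for xi, yi in zip(x, y))
    return -n / 2 * math.log(2 * math.pi * sigma ** 2) - ssr / (2 * sigma ** 2)


def slope_ols(x, y):
    """OLS slope for y = b * x + e (no intercept)."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)


def slope_map(x, y, sigma, tau):
    """Posterior mode of b under the prior b ~ N(0, tau^2): a ridge estimate
    with penalty sigma^2 / tau^2, shrunk towards zero relative to OLS."""
    lam = sigma ** 2 / tau ** 2
    return sum(xi * yi for xi, yi in zip(x, y)) / (sum(xi * xi for xi in x) + lam)


def adjusted_r2(r2, n, k):
    """R^2 penalised for k regressors (excluding the intercept)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [1.0, 2.0, 2.5, 7.0, 10.0]
print(sse(mean(y), y) <= sse(median(y), y))  # mean minimises squared loss
print(sae(median(y), y) <= sae(mean(y), y))  # median minimises absolute loss
b = slope_ols(x, y)
print(gaussian_loglik(b, x, y, 1.0) >= gaussian_loglik(b + 0.1, x, y, 1.0))
print(slope_map(x, y, sigma=1.0, tau=0.5), b)
print(adjusted_r2(0.9, n=30, k=3))
```

The sum of squares is attractive partly because it yields the closed-form estimator and partly because of this Gaussian-likelihood link; other losses lead to other estimators, such as least absolute deviations.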

Implementations:

- Julia: Alistair, GLM.jl, Regression.jl
- check the unsorted links about OLS, though they are not much better than searching on your own

Baby dragon special: